dbt → Cube.js → Superset: Automating Semantic Layer & BI Sync

A fully automated workflow for generating semantic layers and publishing them to BI platforms.

Introduction

Rebuilding the same semantic layer over and over across different BI tools has always felt… unnecessarily painful. Most dashboards still reach directly into databases, each with its own idea of what a “metric” is, so you end up maintaining the same logic in three or four places. Even tools built to solve this, like Cube.js, don’t really solve the end-to-end problem of getting consistent metrics into your BI layer.

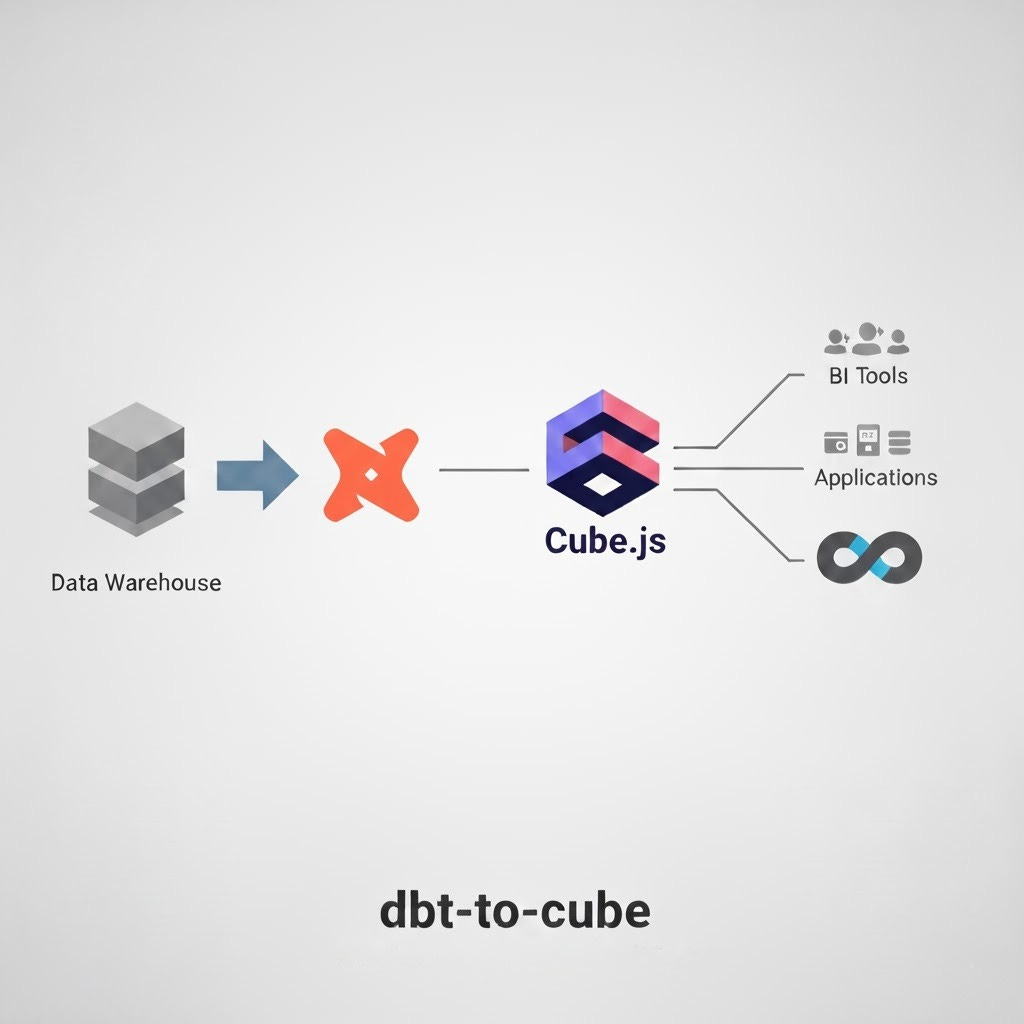

Out of curiosity (and a bit of frustration), I started tinkering with a small proof of concept to see whether dbt, Cube.js, and Superset could be wired together into a single automated flow. The result is dbt-to-cube:

https://github.com/ponderedw/dbt-to-cube

It’s very much a work in progress, but it already connects dbt → Cube.js → Superset and keeps metrics in sync without any manual steps. Sharing it here in case others are experimenting with similar setups – I’d genuinely love feedback, ideas, or contributions from anyone who gives it a try.

Quickstart

See dbt-cube-sync in action! This walkthrough demonstrates a full semantic layer pipeline, from dbt transformations to BI dashboards, without any manual metric duplication.

Already Have dbt, Cube.js, and Superset?

If your stack is already deployed, you don’t need Docker. Just install dbt-cube-sync and point it to your existing deployment:

```bash
pip install dbt-cube-sync

# Convert dbt models to Cube.js schemas
dbt-cube-sync dbt-to-cube \
  --manifest /path/to/manifest.json \
  --catalog /path/to/catalog.json \
  --output /path/to/cube/output

# Sync Cube.js schemas to Superset
dbt-cube-sync cube-to-bi superset \
  --cube-files /path/to/cube/output \
  --url http://your-superset:8088 \
  --username admin \
  --password admin \
  --cube-connection-name Cube
```

This workflow works with Superset, Tableau, or Power BI, giving you full control over your production-ready semantic layer.
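If you run the two commands above from CI or a scheduled job, it can help to wrap them in a small script so the Superset password lives in the environment rather than in the script or your shell history. The Python sketch below uses only the flags shown in the commands above. The environment variable names (`SUPERSET_URL`, `SUPERSET_USERNAME`, `SUPERSET_PASSWORD`) and the `target/` and `cube/model` paths are hypothetical choices for this script, not settings dbt-cube-sync reads itself. It assumes `manifest.json` and `catalog.json` already exist, for example in dbt's default `target/` directory after running `dbt docs generate`.

```python
import os
import subprocess
from pathlib import Path


def build_commands(dbt_target: Path, cube_out: Path, env: dict[str, str]) -> list[list[str]]:
    """Build the two dbt-cube-sync invocations using only the Quickstart flags.

    The SUPERSET_* environment variable names are hypothetical conventions
    for this script, not settings that dbt-cube-sync reads on its own.
    """
    to_cube = [
        "dbt-cube-sync", "dbt-to-cube",
        "--manifest", str(dbt_target / "manifest.json"),
        "--catalog", str(dbt_target / "catalog.json"),
        "--output", str(cube_out),
    ]
    to_superset = [
        "dbt-cube-sync", "cube-to-bi", "superset",
        "--cube-files", str(cube_out),
        "--url", env.get("SUPERSET_URL", "http://localhost:8088"),
        "--username", env.get("SUPERSET_USERNAME", "admin"),
        # No default: raise KeyError rather than silently falling back to admin/admin.
        "--password", env["SUPERSET_PASSWORD"],
        "--cube-connection-name", "Cube",
    ]
    return [to_cube, to_superset]


def run_sync(dbt_target: Path, cube_out: Path) -> None:
    """Run dbt -> Cube.js, then Cube.js -> Superset, stopping at the first failure."""
    for cmd in build_commands(dbt_target, cube_out, dict(os.environ)):
        print("running dbt-cube-sync", cmd[1])  # print the subcommand only, never the password
        subprocess.run(cmd, check=True)
```

For example, `run_sync(Path("target"), Path("cube/model"))` runs both steps in order; because of `check=True`, a failing conversion raises `CalledProcessError` before anything is pushed to Superset.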

Starting from Scratch? Spin Up Everything Locally

If you want a full demo stack including sample data, all services run locally in Docker: PostgreSQL, dbt, Cube.js, Superset, and dbt-cube-sync.

Prerequisites

Docker & Docker Compose

Git

~10 minutes

Step 1: Clone & Start the Stack

```bash
git clone https://github.com/ponderedw/dbt-to-cube
cd dbt-to-cube
docker-compose up --build
```

This will:

Initialize PostgreSQL with sample data

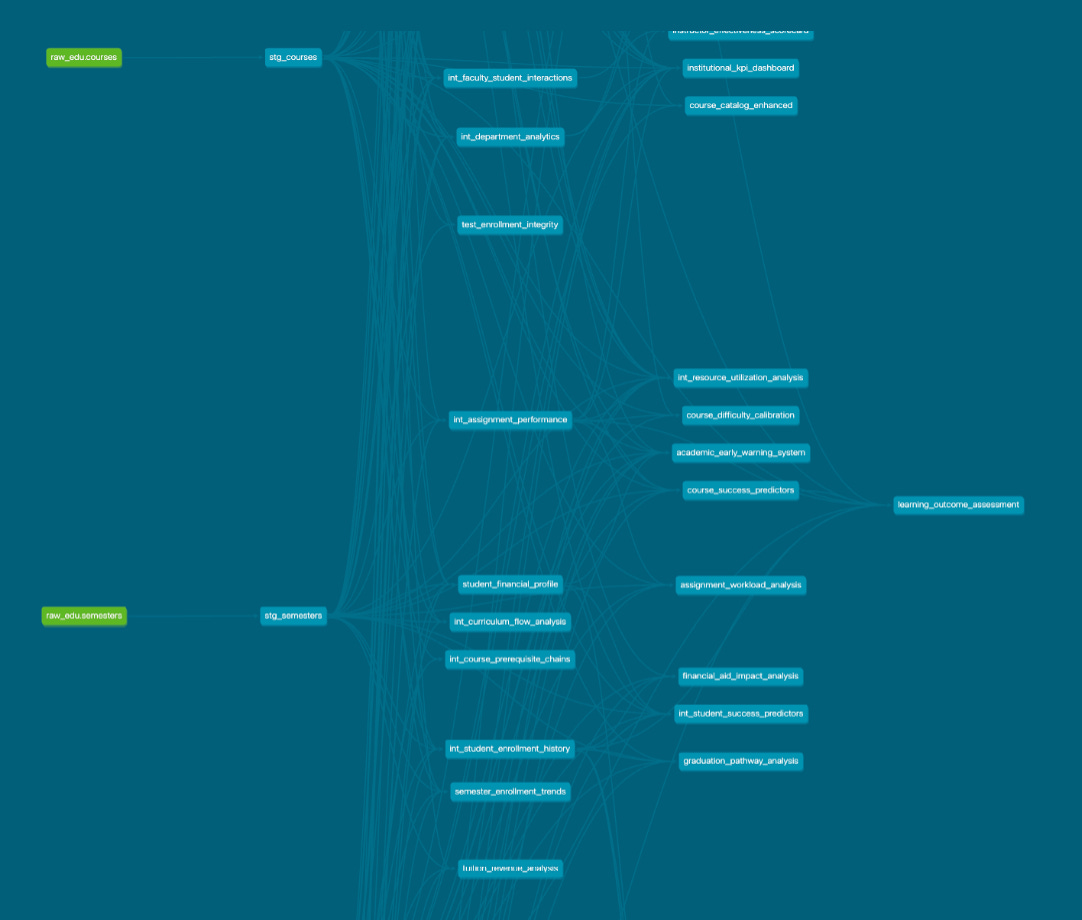

Run dbt transformations on 46 pre-built models

Start Cube.js API serving your semantic layer

Configure Superset with admin access and database connection

Automatically generate Cube.js schemas from dbt and sync them to Superset
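The first run takes a few minutes. If you would rather script the wait than watch the logs, one option is to poll `docker-compose logs` for the success message that Step 2 mentions. This is a sketch under a few assumptions: the service is named `dbt-cube-sync` (as in the Step 3 logs command), the message text is exactly “Pipeline completed successfully!”, and the 15-minute timeout and 10-second poll interval are arbitrary.

```python
import subprocess
import time

# Success message quoted in Step 2 of this walkthrough.
SUCCESS_MARKER = "Pipeline completed successfully!"


def pipeline_finished(log_text: str) -> bool:
    """True once the success marker appears anywhere in the captured logs."""
    return SUCCESS_MARKER in log_text


def wait_for_pipeline(service: str = "dbt-cube-sync",
                      timeout_s: float = 900.0,
                      poll_s: float = 10.0) -> bool:
    """Poll `docker-compose logs <service>` until the marker appears or time runs out."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        result = subprocess.run(
            ["docker-compose", "logs", "--no-color", service],
            capture_output=True, text=True, check=False,
        )
        # Compose writes service logs to stdout; check stderr too, just in case.
        if pipeline_finished(result.stdout + result.stderr):
            return True
        time.sleep(poll_s)
    return False
```

`wait_for_pipeline()` returns `False` on timeout instead of raising, so a wrapper script can decide whether to dump the full logs or retry.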

Step 2: Explore Your Data Stack

Once the logs show “Pipeline completed successfully!”, you can access:

Superset: http://localhost:8088 — login: admin/admin

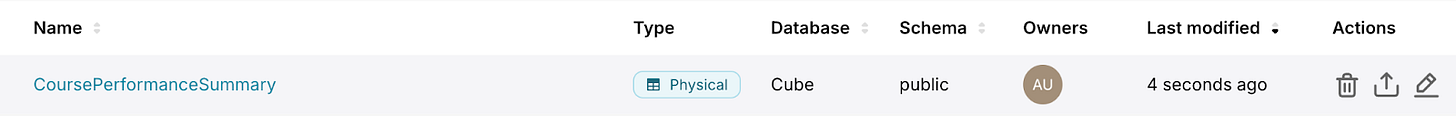

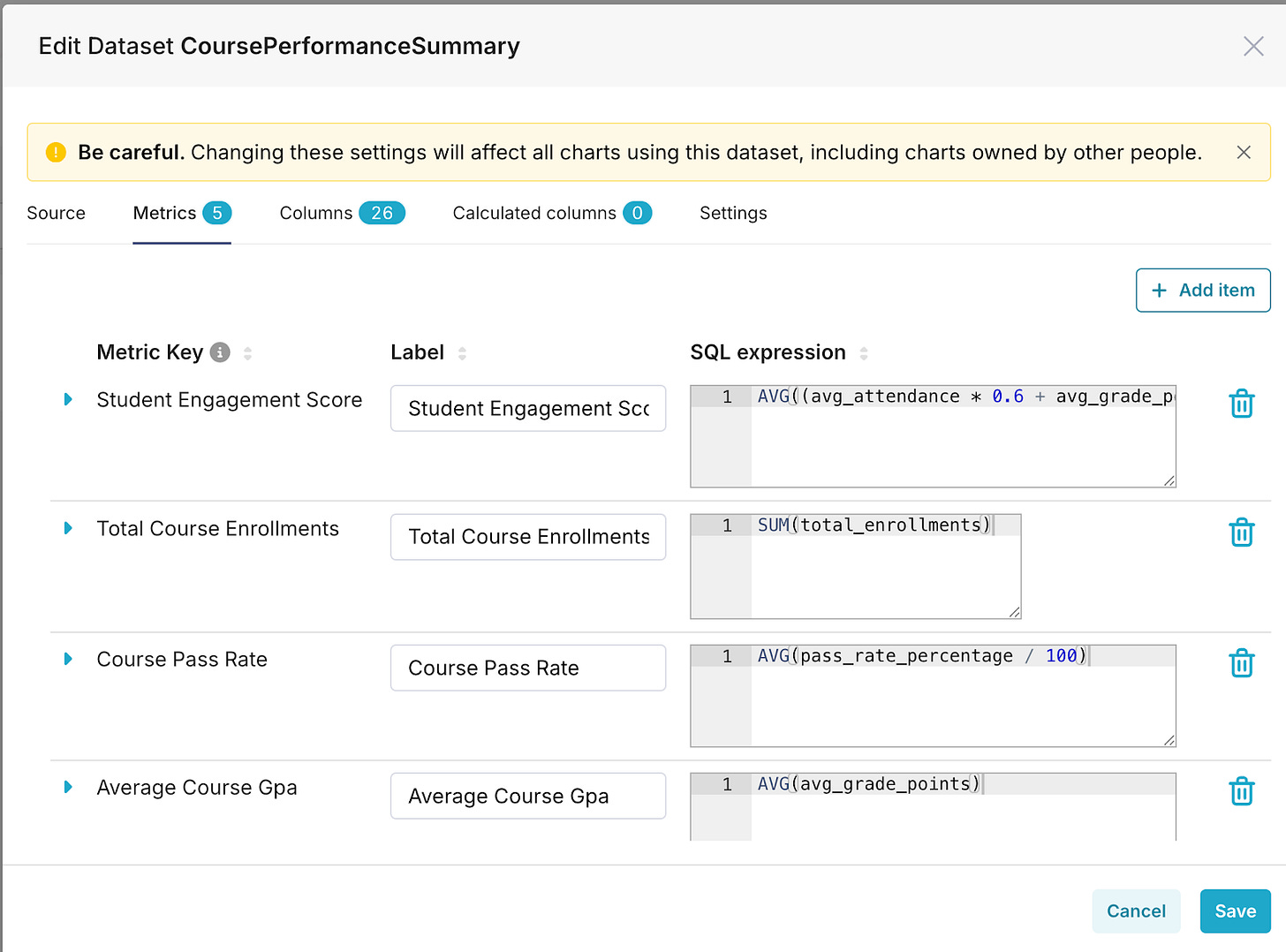

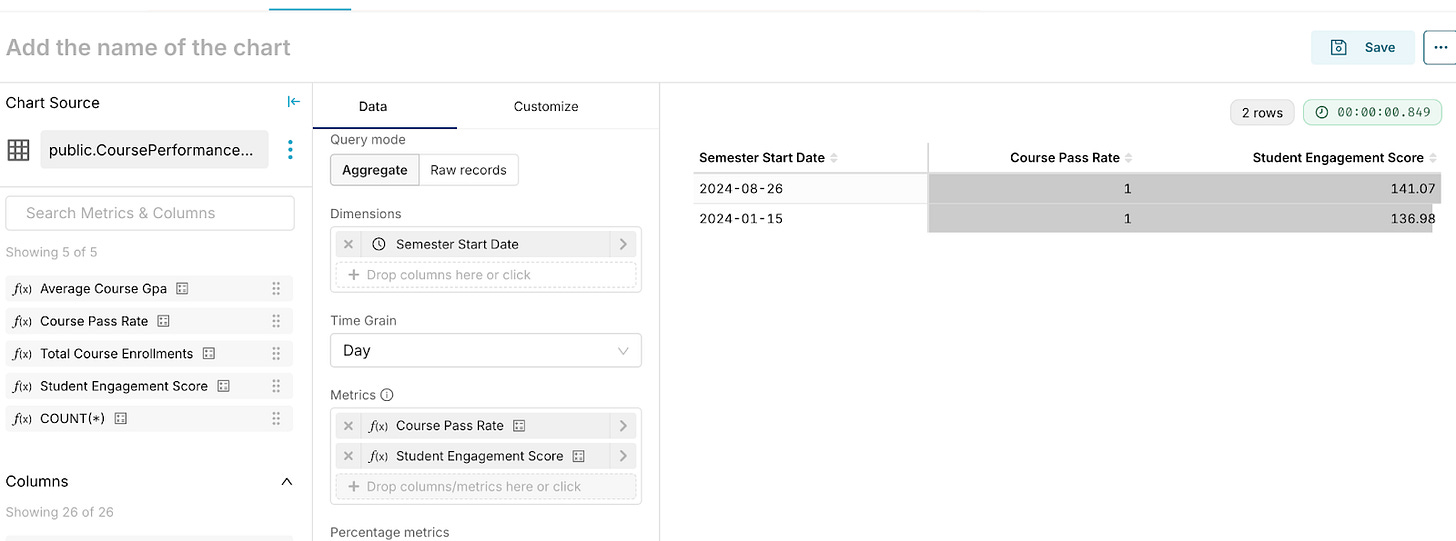

46 datasets auto-created from dbt models

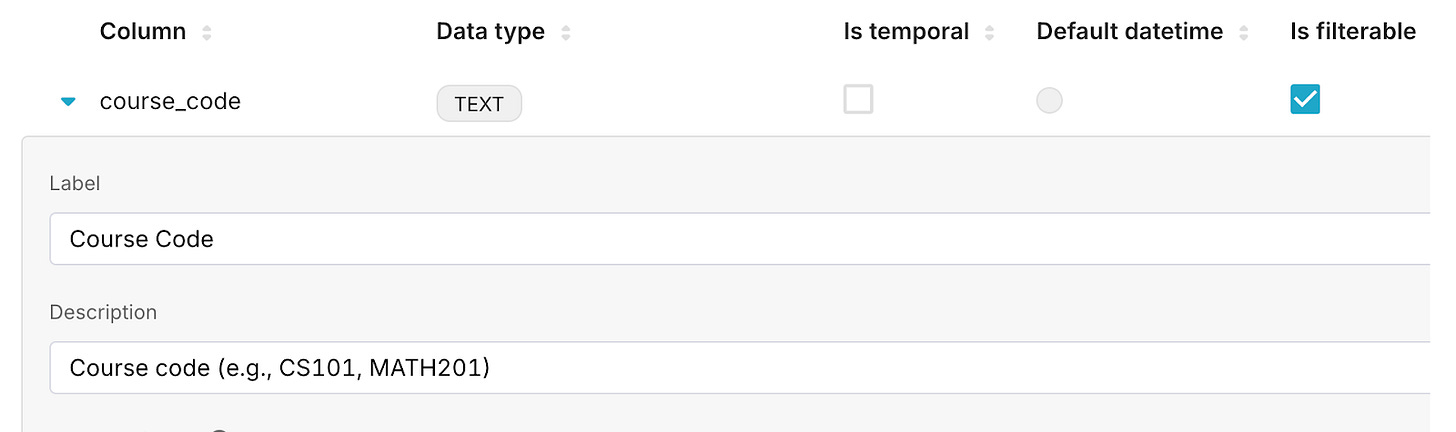

Column metadata and metrics like Average GPA and Pass Rate

dbt Docs: http://localhost:8080 — full DAG and column-level documentation

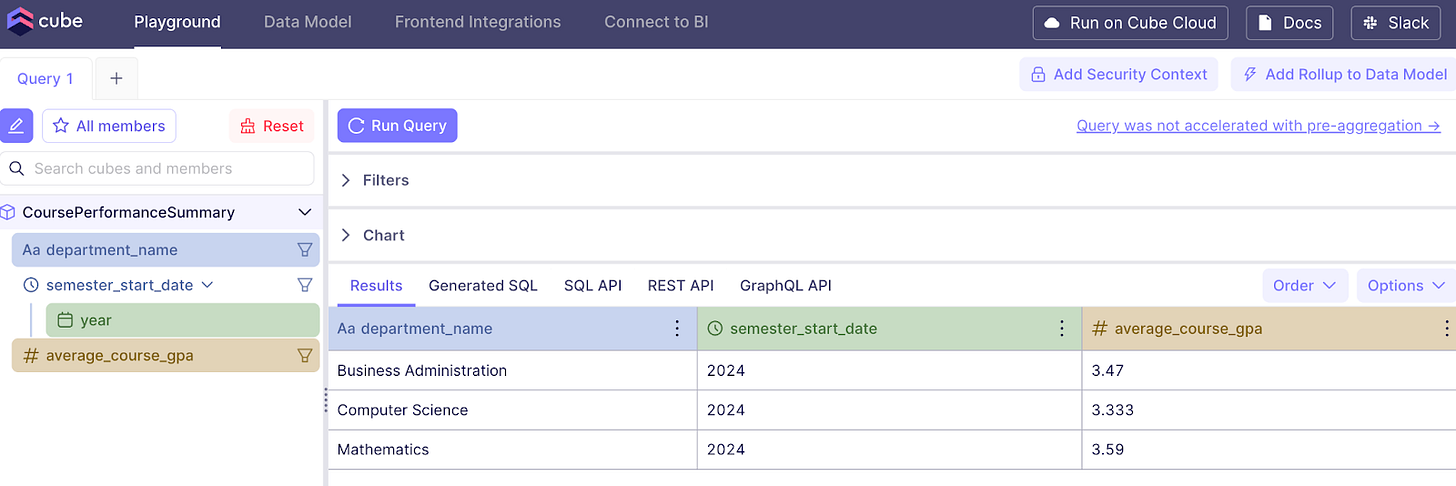

Cube.js API: http://localhost:4000 — REST and GraphQL endpoints
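To query the semantic layer directly, Cube's REST API exposes a `load` endpoint at `/cubejs-api/v1/load` that takes a JSON query listing measures and dimensions. The sketch below builds such a request with the Python standard library. The member names (`students.average_gpa`, `students.school`) are hypothetical, since the actual cube names depend on your dbt models, and the token is assumed to be a JWT signed with your Cube API secret.

```python
import json
import urllib.parse
import urllib.request


def build_load_request(base_url: str, query: dict, token: str) -> urllib.request.Request:
    """Build a GET request against Cube's REST `load` endpoint."""
    encoded = urllib.parse.urlencode({"query": json.dumps(query)})
    url = f"{base_url.rstrip('/')}/cubejs-api/v1/load?{encoded}"
    # Cube reads the JWT (signed with the API secret) from the Authorization header.
    return urllib.request.Request(url, headers={"Authorization": token})


def load(base_url: str, query: dict, token: str) -> list[dict]:
    """Send the request and return the result rows from the `data` field."""
    request = build_load_request(base_url, query, token)
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp)["data"]


# Hypothetical member names; replace them with cubes generated from your own models.
example_query = {
    "measures": ["students.average_gpa"],
    "dimensions": ["students.school"],
}
```

A real client should also retry when Cube answers with `{"error": "Continue wait"}`, which it returns while a long-running query is still processing; `load` as written would raise `KeyError` in that case.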

Step 3: Watch the Pipeline in Action

```bash
docker-compose logs -f dbt-cube-sync
```

The pipeline runs in three automated phases:

dbt Parsing – reads manifest.json & catalog.json, finds models, and maps dependencies

Cube.js Schema Generation – converts dbt models into Cube.js dimensions and measures

BI Tool Sync – pushes datasets and metrics to your BI tool with proper metadata

Everything happens automatically for all models—no manual intervention required.
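To make the first two phases more concrete, here is a simplified sketch of how dbt artifacts can be turned into Cube-style definitions. It is not dbt-cube-sync's actual implementation. It reads model nodes from `manifest.json`, takes column types from `catalog.json`, maps them to Cube dimension types with a rough heuristic, adds a single placeholder `count` measure per model, and keeps upstream model dependencies in each cube's `meta`. How the real tool derives measures such as Average GPA is not shown here.

```python
import json
from pathlib import Path


def cube_type(sql_type: str) -> str:
    """Roughly map a catalog.json column type to a Cube dimension type (heuristic)."""
    t = sql_type.lower()
    if "date" in t or "time" in t:
        return "time"
    if t in ("boolean", "bool"):
        return "boolean"
    if any(k in t for k in ("int", "numeric", "decimal", "double", "float", "real")):
        return "number"
    return "string"


def models_to_cubes(manifest: dict, catalog: dict) -> list[dict]:
    """Phases 1 and 2 in miniature: dbt model nodes -> Cube-style cube definitions."""
    catalog_nodes = catalog.get("nodes", {})
    cubes = []
    for unique_id, node in manifest.get("nodes", {}).items():
        if node.get("resource_type") != "model":
            continue  # skip tests, seeds, snapshots, etc.
        columns = catalog_nodes.get(unique_id, {}).get("columns", {})
        upstream = [
            dep for dep in node.get("depends_on", {}).get("nodes", [])
            if dep.startswith("model.")
        ]
        cubes.append({
            "name": node["name"],
            # alias is the relation name dbt created; fall back to the model name.
            "sql_table": f'{node["schema"]}.{node.get("alias") or node["name"]}',
            "dimensions": [
                {"name": col["name"], "sql": col["name"], "type": cube_type(col["type"])}
                for col in columns.values()
            ],
            # Placeholder measure only; this sketch does not derive business metrics.
            "measures": [{"name": "count", "type": "count"}],
            "meta": {"dbt_unique_id": unique_id, "upstream_models": upstream},
        })
    return cubes


def load_cubes(manifest_path: Path, catalog_path: Path) -> list[dict]:
    """Read the two dbt artifacts from disk and convert them."""
    manifest = json.loads(manifest_path.read_text())
    catalog = json.loads(catalog_path.read_text())
    return models_to_cubes(manifest, catalog)
```

Dumping `{"cubes": load_cubes(...)}` with a YAML library such as PyYAML (`yaml.safe_dump`) gives something close to a Cube YAML data model file, though a real converter would also need to mark a primary key dimension and declare joins between cubes.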

✅ What You’ve Built

In minutes, you now have a unified semantic layer pipeline:

Single source of truth: Metrics defined once in dbt

Automatic propagation: dbt → Cube.js → BI tools

Cross-tool flexibility: Works with Superset, Tableau, Power BI

Developer-friendly: Version-controlled, testable, and documented

Replace sample data with your warehouse and dbt models, and you have a production-ready semantic layer – no metric inconsistencies across tools.

The Takeaway

This little experiment convinced me that an automated semantic layer doesn’t have to be complicated. Define metrics once in dbt, let a small tool translate them to Cube.js, and push them into your BI tool of choice – no more re-implementing the same logic across dashboards.

If you end up trying out dbt-to-cube, I’d be really interested to hear what works, what breaks, or what you’d want it to support next. It’s still a POC, but with feedback from others running into the same problems, it could grow into something genuinely useful for the community.

Always happy to chat if you’re experimenting with semantic layers or have ideas on making this whole workflow smoother!

Hi Egor,

Very interesting approach. I was wondering why Cube.js is needed, and whether there could be a way to interface dbt metrics directly with AI and BI tools. I guess this approach is meant to keep everything open source and avoid relying on the dbt Cloud Semantic Layer? Taking into account the new OSI standard (basically dbt MetricFlow at the moment), do you think skipping Cube.js is feasible?

Thanks!